Faster, cheaper, more controllable – and still powerful: Small Language Models are conquering the enterprise space.

While the world obsesses over GPT-5 and ever-larger models, something exciting is happening in the background: Small Language Models (SLMs) are evolving rapidly and becoming a real alternative for enterprise applications. In our latest webinar, we showed why – and demonstrated our own fine-tuned models live.

The Problem with the Big Ones

Reportedly, 80-95% of corporate AI projects fail, a sobering figure that keeps making headlines. But why?

A major reason: Large language models such as those behind ChatGPT or Claude are often problematic for enterprise use. When OpenAI released GPT-5, it removed the older model options from ChatGPT, a nightmare for any corporate IT department with processes built on them. Add data privacy concerns, unpredictable behavior, and dependency on US cloud services to the mix.

Small but Mighty: The Advantages of SLMs

Small Language Models (typically 1-20 billion parameters) offer tangible benefits:

⚡ Speed: Responses start within milliseconds instead of after multi-second waits. Once you’ve experienced the responsiveness of a local SLM, there’s no going back.

🔒 Privacy: Runs on your own servers without needing an internet connection, so no data leaves your premises. Ideal for sensitive corporate data.

🎯 Control: No surprise model updates, no sudden behavioral changes. The model behaves the same way until you decide to change it.

💰 Cost: Significantly cheaper to run than paying for API calls to major providers.

🔧 Customizability: Through fine-tuning, SLMs can be precisely trained for specific tasks – with manageable effort.

The Secret Sauce: LoRA Fine-tuning

The game-changer is called LoRA (Low-Rank Adaptation). This technique makes it possible to customize models with surprisingly little data (starting at around 100 examples) and modest compute. The principle: The original model weights stay frozen, and you train only a small “adapter”, a pair of low-rank matrices per adapted layer whose product is added to the frozen weights. No retraining of the entire model is required.
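To make the adapter idea concrete, here is a minimal NumPy sketch of a single LoRA-adapted linear layer. It is an illustration under assumptions, not the code behind our models: the class name `LoRALinear`, the rank r = 8, the scaling factor alpha = 16 and the 2048×2048 layer size are all illustrative choices. The frozen weight W is never modified; only the low-rank matrices A and B would be trained, and the layer computes Wx + (alpha/r)·BAx.

```python
import numpy as np


class LoRALinear:
    """Sketch of a linear layer with a frozen weight plus a trainable low-rank update."""

    def __init__(self, weight: np.ndarray, rank: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight          # frozen pretrained weight W, never modified
        self.scale = alpha / rank     # scaling factor alpha / r
        # A gets a small random init and B starts at zero, so B @ A == 0 and the
        # adapted layer initially reproduces the base model exactly.
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))
        self.B = np.zeros((d_out, rank))

    def forward(self, x: np.ndarray) -> np.ndarray:
        """x has shape (batch, d_in); returns x W^T + scale * x A^T B^T."""
        base = x @ self.weight.T
        update = (x @ self.A.T) @ self.B.T  # passes through the rank-r bottleneck
        return base + self.scale * update

    def trainable_params(self) -> int:
        """Only A and B would be updated during fine-tuning."""
        return self.A.size + self.B.size

    def merged_weight(self) -> np.ndarray:
        """Fold the adapter into W after training: W' = W + scale * B @ A."""
        return self.weight + self.scale * (self.B @ self.A)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    W = rng.normal(size=(2048, 2048))   # illustrative layer size, an assumption
    layer = LoRALinear(W, rank=8)
    x = rng.normal(size=(4, 2048))
    print(layer.trainable_params(), W.size)        # prints: 32768 4194304
    print(np.allclose(layer.forward(x), x @ W.T))  # prints: True (B is still zero)
```

In this example the adapter has 32,768 trainable values against roughly 4.2 million in the frozen matrix, under 1%. Because B starts at zero, the adapted layer initially behaves exactly like the base model, and after training the update can be merged back into W so inference costs nothing extra. For real models, libraries such as Hugging Face’s PEFT implement this pattern; the sketch leaves out the training loop.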

The result? A model that not only gives the right answers but responds in exactly the right style. Anyone who’s tried to get ChatGPT to give shorter answers or avoid certain formatting through prompting alone knows how difficult that is. With fine-tuning, the model learns the style from the examples, and it holds far more reliably.
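The style comes from the training examples themselves. The sketch below shows one hypothetical way to prepare them: question and answer pairs written in the target style, serialized as chat-style JSONL records with `system`, `user` and `assistant` roles. That layout is a common convention in fine-tuning tools, but it is an assumption here; the exact format depends on the training framework, and the example pairs, `SYSTEM_PROMPT` and style rules are made up for illustration.

```python
import json

# Hypothetical target style: short, plain-text answers without markdown.
SYSTEM_PROMPT = "Answer in one or two sentences. No lists, no markdown."
MAX_ANSWER_WORDS = 40

# Illustrative question/answer pairs; a real dataset would hold ~100 or more.
EXAMPLES = [
    ("What does LoRA stand for?", "Low-Rank Adaptation."),
    ("Can the model run without internet access?", "Yes. It runs entirely on our own servers."),
]


def check_style(answer: str) -> bool:
    """Return False for answers that are too long or use markdown formatting."""
    too_long = len(answer.split()) > MAX_ANSWER_WORDS
    has_markdown = any(marker in answer for marker in ("**", "\n- ", "\n* "))
    return not too_long and not has_markdown


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one pair in a chat-style record (system, user, assistant)."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize pairs as JSON Lines, rejecting any answer that breaks the style."""
    lines = []
    for question, answer in pairs:
        if not check_style(answer):
            raise ValueError(f"answer violates target style: {answer!r}")
        lines.append(json.dumps(to_chat_record(question, answer), ensure_ascii=False))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(to_jsonl(EXAMPLES), end="")
```

The style check is deliberately strict: if the examples themselves contain bullet lists or long answers, the fine-tuned model will learn exactly that.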

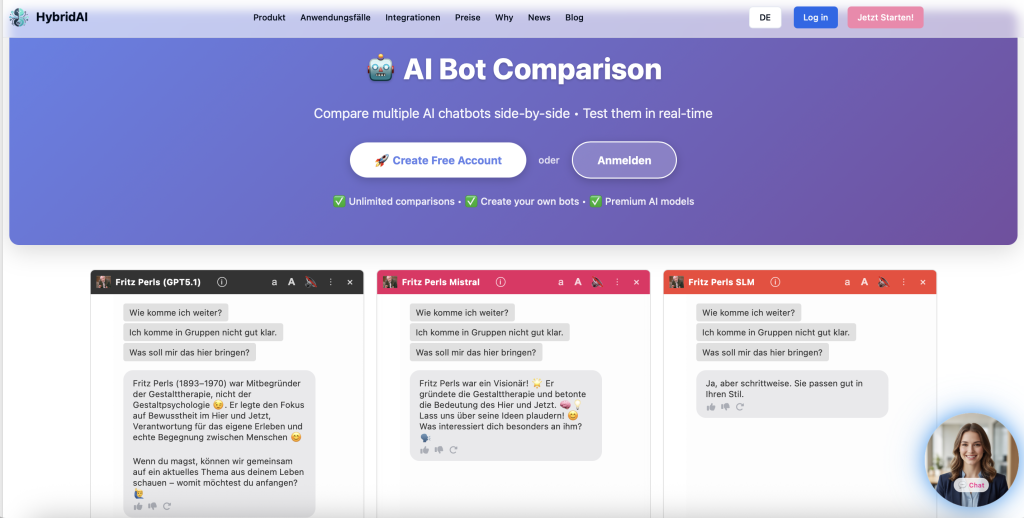

Live Demo: Our Own SLMs

In the webinar, we showed three fine-tuned models, all based on Liquid AI’s LFM2 with just 1.2 billion parameters:

- General German Model: Solid answers to everyday and technical questions

- Fritz Perls Therapy Bot: A model that closely imitates the confrontational conversation style of Gestalt therapist Fritz Perls

- Market Research Association Model: Analyzes implicit brand associations in the style of a professional market researcher

The responsiveness is impressive – answers come practically instantly. And the best part: Everything runs on our own European servers.

The Future: Hybrid is King

Our vision at HybridAI: It’s all about the combination. Small, fine-tuned models for routine tasks, large models for complex queries – orchestrated by an intelligent control layer that recognizes which model is right for each situation.

This gives enterprises the best of both worlds: Fast, controllable, privacy-compliant answers for the roughly 80% of queries that are routine, and the power of large models when truly needed.
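A control layer like this can be as simple or as sophisticated as needed. As a purely hypothetical sketch, the routing step could look like the code below: a rule-based `HybridRouter` that escalates long queries, or queries containing certain keywords, to the large model and sends everything else to the small one. The class name, the 60-word threshold, the keywords and the stand-in lambdas for the models are all assumptions; a production router might instead use a trained classifier or the small model’s own confidence.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" here is anything that maps a prompt to an answer.
Model = Callable[[str], str]


@dataclass
class HybridRouter:
    """Hypothetical rule-based control layer for a small/large model pair."""
    small_model: Model
    large_model: Model
    max_small_words: int = 60
    escalation_keywords: tuple[str, ...] = ("analyze", "compare", "step by step", "strategy")

    def needs_large_model(self, query: str) -> bool:
        """Escalate long queries or queries that hint at complex reasoning."""
        text = query.lower()
        if len(text.split()) > self.max_small_words:
            return True
        return any(keyword in text for keyword in self.escalation_keywords)

    def route(self, query: str) -> tuple[str, str]:
        """Return which model handled the query, plus its answer."""
        if self.needs_large_model(query):
            return "large", self.large_model(query)
        return "small", self.small_model(query)


if __name__ == "__main__":
    router = HybridRouter(
        small_model=lambda q: f"[SLM] {q}",   # stand-in for a local fine-tuned SLM
        large_model=lambda q: f"[LLM] {q}",   # stand-in for a large hosted model
    )
    print(router.route("What are our opening hours?")[0])                       # prints: small
    print(router.route("Compare these three supplier contracts in detail")[0])  # prints: large
```

Keeping the routing decision in one small, testable function makes it easy to log which model answered each query and to tune the thresholds over time.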

Want to Try It Yourself?

We’re making our SLM demo publicly available. Test for yourself how the small models perform – and contact us if you’d like to discuss custom fine-tuned models for your use cases.