Yes, in the current AI hype discourse this statement almost feels like suicide, but I want to briefly explain why we at HybridAI came to the conclusion not to set up or use an MCP server for now.

MCP (Model Context Protocol) is a proposed standard, not yet an established one, developed and promoted by Anthropic. It is gaining a lot of traction in the AI community right now.

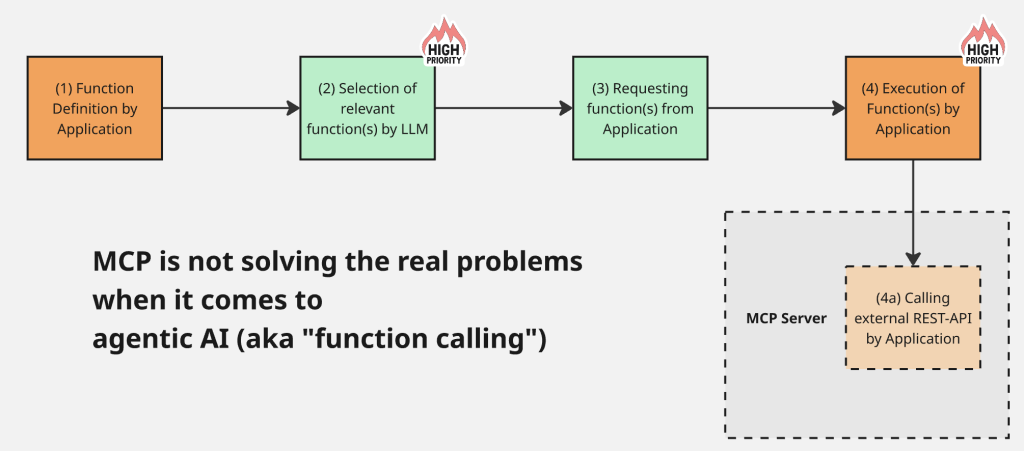

An MCP server is about standardizing the tool calls (or “function calls”) that are so important for today’s “agentic” AI applications – specifically, the interface from the LLM (tool call) to the external service or tool interface, usually some REST API.
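
To make that interface concrete, here is a minimal sketch of the step in question: a tool description the LLM sees, and the translation of the resulting tool call into a REST request. The tool name `get_weather`, the `api.example.com` endpoint, and the helper `build_request_url` are made up for illustration; this is not HybridAI's implementation and not the MCP wire format.

```python
import json
from urllib.parse import urlencode

# Hypothetical tool description in the JSON-schema style that common
# function-calling APIs use. Name and fields are illustrative only.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def build_request_url(tool_call: dict) -> str:
    """Translate an LLM tool call into the URL of a (made-up) REST endpoint."""
    if tool_call["name"] != WEATHER_TOOL["name"]:
        raise ValueError(f"unknown tool: {tool_call['name']}")
    # Function-calling APIs typically deliver the arguments as a JSON string.
    args = json.loads(tool_call["arguments"])
    return "https://api.example.com/v1/weather?" + urlencode({"city": args["city"]})


# What the LLM might emit once it decides to use the tool.
call = {"name": "get_weather", "arguments": '{"city": "Berlin"}'}
url = build_request_url(call)
print(url)
```

The glue between tool call and REST request is a handful of lines per tool, which is the part a shared protocol would replace.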

(Image generated with the current ChatGPT image engine. I have a soft spot for these trashy AI images and will miss them…)

At HybridAI, we have long relied on a strong implementation of function calls. We can look back on a few dozen implemented and production-deployed function calls, used by over 450 AI agents. So, we have some experience in this field. We also use N8N for certain cases, which adds another relevant layer in practice. Our agents also expose APIs to the outside world, so we know the problem in both directions (i.e., we could both set up an MCP server for our agents and query other MCPs in our function calls).

So why don’t I think MCP servers are super cool?

Simple: they solve a problem that, in my opinion, barely exists and leave the two much more important problems of function calls and agentic setups unsolved.

First: why does the problem of standardizing external tool APIs hardly exist? Two reasons.

(1) Existing APIs and tools usually offer REST APIs or similar, meaning they already use a standardized interface. These interfaces are quite stable, which you can tell from API URLs still using “/v1/…” or “/v2/…”, and they stay accessible for a long time. Older APIs are often still relevant, like those of the ISS, the European Patent Office, or some city’s Open Data API. These services won’t offer MCP interfaces anytime soon, so you’ll have to deal with those old APIs for a long time anyway.

(2) And this surprises me a bit given the MCP hype: LLMs are actually pretty good at querying old APIs, better than other systems I’ve seen. You just throw the API output into the LLM and let it respond. No parsing, no error handling, no deciphering XML syntax. The LLM handles it reliably and fault-tolerantly. So why abstract that away with MCP?
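
Point (2) can be sketched in a few lines. The snippet below wraps an unparsed API response as a tool-result message, assuming the `role`/`tool_call_id`/`content` message shape used by OpenAI-style chat APIs. The helper `tool_result_message`, the `max_chars` cutoff, and the XML payload are assumptions for illustration, and the actual LLM call is left out.

```python
import json


def tool_result_message(tool_call_id: str, raw_body: str, max_chars: int = 8000) -> dict:
    """Wrap an unparsed API response as a tool-result chat message."""
    # No parsing and no schema validation: the LLM reads the payload as-is.
    # The truncation only keeps oversized responses out of the context window.
    return {
        "role": "tool",
        "tool_call_id": tool_call_id,
        "content": raw_body[:max_chars],
    }


# Made-up XML payload standing in for the response of an older API.
legacy_xml = "<position><lat>51.5</lat><lon>-0.1</lon></position>"
message = tool_result_message("call_1", legacy_xml)
print(json.dumps(message, indent=2))
```

The message would be appended to the conversation and sent back to the model, which then answers from the raw XML or JSON directly.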

In reality, MCP adds another tech layer to solve a problem that isn’t that big in daily tool-calling.

The bigger issues are:

–> Tool selection

–> Tool execution and code security

Tool selection: Agentic solutions work by making multiple tools available, sometimes chained sequentially, with the LLM deciding which to use and how to combine them. This process can be influenced with tool descriptions: mini-prompts that describe each function and its arguments. But this gets messy fast. For example, we have a tool call for Perplexity that is meant for questions about current events (“what’s the weather today…”), but the LLM also calls it when the topic is merely a bit complex. Or it triggers the WordPress Search API when we wanted GPT-4.1 web search. It’s messy, and it will get more complex with increased autonomy.
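
As an illustration of such mini-prompts, here is a sketch of two tool descriptions that state both when to use a tool and when not to. The tool names, the wording, and the `Tool` helper class are hypothetical, and nothing guarantees that the LLM actually follows these hints, which is exactly the problem described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    use_when: str
    avoid_when: str

    def to_spec(self) -> dict:
        """Render the tool as a function-calling spec with a scoped description."""
        description = f"Use when {self.use_when}. Do not use when {self.avoid_when}."
        return {
            "name": self.name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        }


# Hypothetical tools, loosely modeled on the two examples in the text.
TOOLS = [
    Tool(
        "perplexity_search",
        "the user asks about current events or live data such as today's weather",
        "the question is merely complex but not time-sensitive",
    ),
    Tool(
        "wordpress_search",
        "the user asks about content published on the customer's own website",
        "the answer requires a general web search",
    ),
]

specs = [tool.to_spec() for tool in TOOLS]
for spec in specs:
    print(spec["name"], "->", spec["description"])
```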

Tool execution: A huge issue for scaling and security is the actual execution of tool code. This happens locally on your own system, not at the LLM provider. Ideally, at HybridAI, we’d offer customers the ability to submit their own code, which would be executed as tool calls when the LLM triggers them. But in terms of code integrity, platform stability, and security, that’s a nightmare (anyone who has ever submitted a WordPress plugin knows what I mean). This issue will grow with more use of “operator” or “computer use” tools, as those also run locally, not at OpenAI.
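
To show where the difficulty starts, here is a deliberately minimal sketch that runs submitted tool code in a separate Python process with a timeout. The helper `run_tool_code` and its return format are assumptions for illustration. This is not a sandbox: the child process can still read files and open network connections, so real isolation would need containers, restricted users, or something similar on top.

```python
import subprocess
import sys


def run_tool_code(source: str, timeout_s: float = 2.0) -> dict:
    """Run untrusted tool code in a child process and capture its output."""
    try:
        proc = subprocess.run(
            # -I starts Python in isolated mode (ignores environment variables
            # and the user site directory). It does NOT restrict file or
            # network access.
            [sys.executable, "-I", "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising this exception.
        return {"ok": False, "error": "timeout"}
    if proc.returncode != 0:
        # Keep only the tail of stderr so error messages stay short.
        return {"ok": False, "error": proc.stderr.strip()[-500:]}
    return {"ok": True, "output": proc.stdout.strip()}


good = run_tool_code("print(1 + 1)")
stuck = run_tool_code("while True: pass", timeout_s=0.5)
print(good)
print(stuck)
```

Even this small version already needs a timeout, output capture, and error handling, and it leaves the hard questions of code integrity and platform stability open.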

For these two issues, I’d love to see ideas, maybe a TOP (Tool Orchestration Protocol) or a TEE (Tool Execution Environment). But hey.