Update 2026: We now also support MQTT sensor data. More importantly, we have connected our IoT sensor infrastructure to our BI solution, so sensor data can be not only read and reported but also analyzed across multiple dimensions.

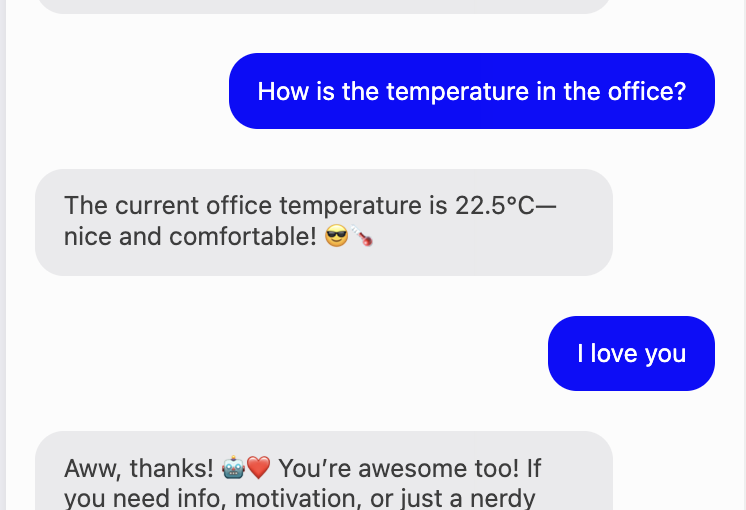

We’re excited to introduce a powerful new feature on our platform: the ability to stream IoT sensor data directly into your chatbot’s context window. This isn’t about triggering an external API tool call—it’s about augmenting the bot’s real-time understanding of the world.

How It Works

IoT sensors, whether connected via MQTT, HTTP, or other protocols, can now send live data to our system. These values are not fetched on demand via function calls. Instead, they’re continuously injected into the active context window of your agent, so the data is available for reasoning and conversation without an extra round trip.
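To make the idea concrete, here is a minimal sketch of context injection in Python. It is not our platform’s actual implementation: `SensorContext`, `Reading`, `build_messages`, the `max_age_s` cutoff, and the message format are all hypothetical names chosen for illustration. The sketch keeps only the latest value per sensor and renders the fresh ones into a text block that is placed in front of every user turn.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Reading:
    value: float
    unit: str
    timestamp: float  # seconds since the epoch


@dataclass
class SensorContext:
    """Hypothetical store that keeps the latest reading per sensor."""
    max_age_s: float = 60.0  # assumed freshness cutoff
    readings: dict = field(default_factory=dict)

    def update(self, sensor_id, value, unit, timestamp=None):
        # Called whenever a sensor message arrives (MQTT, HTTP, ...).
        ts = time.time() if timestamp is None else timestamp
        self.readings[sensor_id] = Reading(value, unit, ts)

    def render(self, now=None):
        # Turn all fresh readings into a plain-text context block.
        now = time.time() if now is None else now
        lines = []
        for sensor_id in sorted(self.readings):
            r = self.readings[sensor_id]
            age = now - r.timestamp
            if age <= self.max_age_s:
                lines.append(f"- {sensor_id}: {r.value} {r.unit} ({age:.0f}s ago)")
        if not lines:
            return "Live sensor readings: none"
        return "Live sensor readings:\n" + "\n".join(lines)


def build_messages(ctx, user_text, now=None):
    # The sensor block travels with every request; no tool call is needed.
    return [
        {"role": "system", "content": ctx.render(now)},
        {"role": "user", "content": user_text},
    ]
```

Dropping stale readings is a design choice in this sketch: a value that is several minutes old can mislead the model more than a missing one.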

Real-World Use Cases

🏃‍♂️ Fitness and Weight Loss

A health coach bot can respond based on your real-time activity:

“You’ve already reached 82% of your 10,000 step goal—great job! Want to plan a short walk tonight?”

Or reflect weight trends from smart scales:

“Your weight dropped by 0.8 kg since last week—awesome progress! Should we review your meals today?”
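The numbers in these replies are simple arithmetic over raw readings. The following sketch shows one way the figures could be derived before they reach the context window. The function names and the sample values (8,200 steps, 81.3 kg last week, 80.5 kg today) are assumptions made up for this example, not data from a real device.

```python
def step_progress(steps, goal=10_000):
    """Share of the daily step goal reached, as a whole percentage."""
    return round(100 * steps / goal)


def weight_change(previous_kg, current_kg):
    """Change in weight, rounded to 0.1 kg (negative means weight lost)."""
    return round(current_kg - previous_kg, 1)


def fitness_context(steps, goal, previous_kg, current_kg):
    # One compact line that can be injected into the bot's context.
    pct = step_progress(steps, goal)
    delta = weight_change(previous_kg, current_kg)
    return f"steps: {steps}/{goal} ({pct}%), weight change since last week: {delta:+.1f} kg"


print(fitness_context(8200, 10_000, 81.3, 80.5))
```

Precomputing the percentage and the delta keeps the model from having to do the arithmetic itself.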

⚡️ E-Mobility and Charging

A mobility assistant knows your car’s charging state:

“Your battery is at 23%. The nearest fast charger is 2.4 km away—shall I guide you there?”

Bots can also keep track of live station availability and recommend chargers based on up-to-date infrastructure status.

🏗 Accessibility and Public Infrastructure

A public-facing city bot could say:

“The elevator at platform 5 is currently out of service. I recommend using platform 6 and taking the overpass. Need directions?”

This is especially useful for wheelchair users and people with limited mobility.

🏭 Smart Manufacturing and Industry

A factory assistant can act on process data:

“Flow rate on line 2 is below target. Should I trigger the maintenance routine for the filter system?”

This enables natural-language monitoring, error detection, and escalation, all in real time.
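A bot can only flag “below target” if it knows the target. As a rough sketch, the check could look like the code below. The `Limit` class, the sensor IDs, the target of 50 and the 10% tolerance are hypothetical values picked for illustration and do not describe a real production line.

```python
from dataclasses import dataclass


@dataclass
class Limit:
    target: float
    tolerance: float  # allowed shortfall as a fraction of the target


def flag_deviations(readings, limits):
    """Return one alert line per sensor whose value is below its lower bound."""
    alerts = []
    for sensor_id, value in readings.items():
        limit = limits.get(sensor_id)
        if limit is None:
            continue  # no target configured for this sensor
        low = limit.target * (1 - limit.tolerance)
        if value < low:
            alerts.append(f"{sensor_id} is below target ({value} < {low:.1f})")
    return alerts


readings = {"line1/flow_rate": 50.0, "line2/flow_rate": 41.0}
limits = {
    "line1/flow_rate": Limit(target=50.0, tolerance=0.1),
    "line2/flow_rate": Limit(target=50.0, tolerance=0.1),
}
for alert in flag_deviations(readings, limits):
    print(alert)
```

The alert lines can be injected into the context next to the raw values, so the assistant can propose an action such as a maintenance routine and leave the final decision to the operator.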

What Makes This Different?

🔍 Contextual Awareness, Not Tool-Calling

Sensor data is part of the active reasoning window—not fetched via a slow external call, but immediately available to the model during inference.

🤖 True Multimodal Awareness

Bots now reason not just over language but also over live numerical signals—physical reality meets LLM intelligence.

🚀 Plug & Play Integration

Bring your own sensors: from wearables to factory machines to public infrastructure. We help you connect them.

In Summary

This new feature lets intelligent agents combine conversational AI with a live, evolving view of the physical world. Whether you’re building a wellness coach, a mobility assistant, or an industrial controller, your agent can now reason over real-world data in real time.

Reach out if you’d like to get started!