Over the past few months, we’ve witnessed a real boom in AI applications – from ChatGPT to Copilot and specialized enterprise solutions. A question that keeps coming up: How can I intelligently connect my existing Business Intelligence data with AI?

That is easier said than done. While AI excels at handling unstructured text, structured databases present a unique challenge.

The Problem: Structured Data Meets AI

Why RAG Isn’t the Solution

The classic RAG approach (Retrieval-Augmented Generation) works brilliantly for documents, PDFs, or knowledge bases: text is converted into embedding vectors and searched by semantic similarity.

BI data in SQL databases, however, is structured. Working with it depends on:

- Precise aggregations (SUM, AVG, COUNT)

- Complex JOINs across multiple tables

- WHERE conditions with exact values

- GROUP BY to break results down by dimension (e.g., region or product)

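A vector search over your revenue table finds rows that look similar to the question. It cannot add them up, filter them by exact values, or join them to other tables, so it will never match the precision of a SQL query. RAG is simply the wrong tool here.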
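To make the difference concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `sales` table and its figures are invented for illustration. The query returns exact per-quarter totals, an aggregation that a similarity search over rows has no way to perform.

```python
import sqlite3

# Hypothetical in-memory sales table, for illustration only
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, region TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(3, "North", 100.0), (3, "South", 50.0), (4, "North", 120.0), (4, "South", 80.0)],
)

# Exact aggregation with SUM + GROUP BY: every matching row is counted, none approximated
rows = conn.execute(
    "SELECT quarter, SUM(revenue) FROM sales GROUP BY quarter ORDER BY quarter"
).fetchall()
print(rows)  # [(3, 150.0), (4, 200.0)]
```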

Why Simple SQL Tool Calls Are Too Limited

The next thought: “Let’s just give the LLM an SQL tool!”

The problem with that:

- Lack of context continuity: For every question, the model must re-understand the entire schema context

- No iteration: Complex analyses require multiple cascading queries

- Reasoning overhead: The conversational model must simultaneously write SQL AND provide clever answers

- Prompt collision: SQL syntax and natural conversation compete for context space

A model that’s supposed to do both – SQL and conversation – won’t do either particularly well.

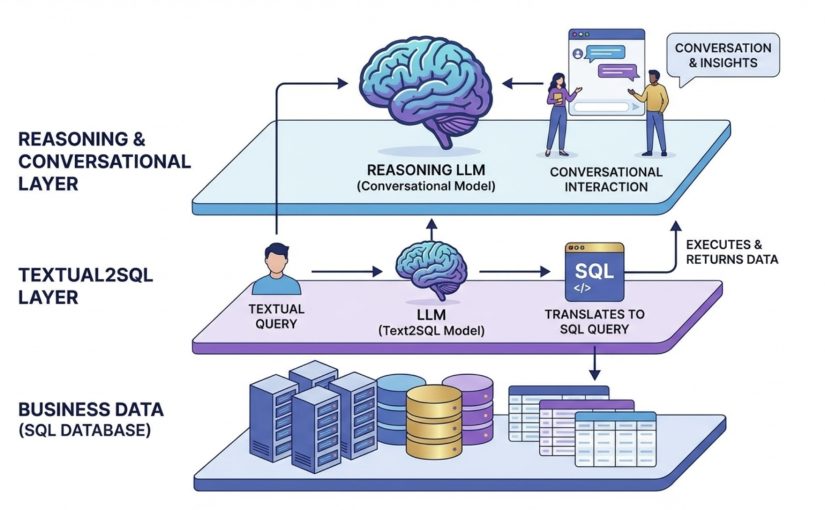

The Solution: A Two-Layer Approach

The elegant solution lies in specialization through layering. Instead of having one model do everything, we split the work between two specialized LLMs:

Layer 1: Text2SQL – The Data Translator

The first LLM has a single task: translate natural-language questions into precise SQL.

Benefits of this specialization:

- Focuses solely on schema understanding and SQL syntax

- Can be fed with extensive database context

- No “distraction” from conversational requirements

- Smaller, faster model possible (e.g., GPT-3.5, Claude Haiku)
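A minimal sketch of what this specialization can look like in code, assuming the model is reachable through some function `llm` that takes a prompt string and returns text. The schema string, prompt wording, and the names `build_text2sql_prompt` and `text2sql` are hypothetical, and `fake_llm` stands in for a real model call (Python 3.9+ for `str.removeprefix`).

```python
from typing import Callable

# Hypothetical schema context; in practice, describe your real tables and columns
SCHEMA = "sales(year INTEGER, quarter INTEGER, product_category TEXT, revenue REAL)"
FENCE = "`" * 3  # Markdown code fence that models often wrap their SQL in


def build_text2sql_prompt(question: str, schema: str) -> str:
    """Build a prompt that contains only schema context and a single task."""
    return (
        "Translate the question into one SQLite SELECT statement.\n"
        "Use only these tables and columns:\n"
        f"{schema}\n"
        "Return only the SQL, without explanation.\n\n"
        f"Question: {question}\n"
        "SQL:"
    )


def text2sql(question: str, llm: Callable[[str], str], schema: str = SCHEMA) -> str:
    """Ask the model for SQL and strip whitespace and an optional Markdown fence."""
    raw = llm(build_text2sql_prompt(question, schema)).strip()
    return raw.removeprefix(FENCE + "sql").removesuffix(FENCE).strip()


def fake_llm(prompt: str) -> str:
    # Stand-in for a real (small, fast) model behind your provider's API
    return FENCE + "sql\nSELECT SUM(revenue) FROM sales WHERE quarter = 4\n" + FENCE


print(text2sql("How much revenue did we make in Q4?", fake_llm))
# SELECT SUM(revenue) FROM sales WHERE quarter = 4
```

Because the prompt carries nothing but schema and task, it can grow to include column descriptions or example queries without crowding any conversational context.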

Workflow:

- User asks: “How much revenue did we make in Q4?”

- Text2SQL LLM translates to: `SELECT SUM(revenue) FROM sales WHERE quarter = 4`

- Query is executed, data comes back
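The "query is executed" step can be sketched like this. The read-only check is a deliberately naive assumption for illustration (it also rejects valid `WITH ... SELECT` queries and semicolons inside string literals); a real deployment should rely on database permissions such as a read-only user. The function name and table are hypothetical.

```python
import sqlite3


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute model-generated SQL after a naive read-only check (illustrative only)."""
    statement = sql.strip().rstrip(";").strip()
    # Reject anything that is not a single SELECT statement
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(statement).fetchall()


# Hypothetical sales table with revenue in whole currency units
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, revenue INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(3, 1_800_000), (4, 1_000_000), (4, 1_100_000)],
)

print(run_generated_sql(conn, "SELECT SUM(revenue) FROM sales WHERE quarter = 4"))
# [(2100000,)]
```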

Layer 2: Reasoning LLM – The Conversation Partner

The second, higher-level LLM is your intelligent analyst. It:

- Conducts the conversation with the user

- Decides what data is needed

- Calls the Text2SQL LLM as a tool

- Interprets the data and draws conclusions

- Asks follow-up questions and conducts multi-turn analyses
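The tool hand-off between the two layers might be sketched as follows. The tool definition follows the JSON-Schema style that common function-calling APIs accept, but exact field names vary by provider. `query_data`, `handle_tool_call`, and the `revenue` table are hypothetical, and `text2sql` is any function that turns a request into SQL (a stub here). The reasoning model only sees a natural-language interface, never the schema.

```python
import json
import sqlite3
from typing import Callable

# Hypothetical tool exposed to the reasoning LLM
QUERY_DATA_TOOL = {
    "name": "query_data",
    "description": "Answer a factual question about company BI data. "
                   "Pass a precise natural-language data request.",
    "parameters": {
        "type": "object",
        "properties": {"request": {"type": "string"}},
        "required": ["request"],
    },
}


def handle_tool_call(name: str, arguments: str,
                     text2sql: Callable[[str], str],
                     conn: sqlite3.Connection) -> str:
    """Route a tool call to the Text2SQL layer and return the rows as JSON."""
    if name != QUERY_DATA_TOOL["name"]:
        raise ValueError(f"Unknown tool: {name}")
    request = json.loads(arguments)["request"]
    sql = text2sql(request)                 # Layer 1: language -> SQL
    cursor = conn.execute(sql)              # run against the database
    columns = [col[0] for col in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
    return json.dumps({"sql": sql, "rows": rows})  # returned to the reasoning LLM


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE revenue (quarter TEXT, amount REAL)")
conn.executemany("INSERT INTO revenue VALUES (?, ?)",
                 [("Q1", 1.2), ("Q2", 1.5), ("Q3", 1.8), ("Q4", 2.1)])


def stub_text2sql(request: str) -> str:
    # Stand-in for the Text2SQL model
    return "SELECT quarter, amount FROM revenue ORDER BY quarter"


result = handle_tool_call(
    "query_data",
    json.dumps({"request": "Revenue per quarter last 12 months"}),
    stub_text2sql,
    conn,
)
print(result)
```

Returning the executed SQL alongside the rows lets the reasoning LLM, or a human reviewer, check what was actually queried.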

Example Dialog:

User: “How is our revenue developing?”

Reasoning LLM thinks:

→ Need revenue data for recent quarters

→ Calls Text2SQL: “Revenue per quarter last 12 months”

→ Receives data: Q1: 1.2M, Q2: 1.5M, Q3: 1.8M, Q4: 2.1M

Reasoning LLM responds:

“Your revenue shows a clear upward trend with +75% growth from Q1 to Q4. Q4 was particularly strong with about +17% vs Q3.”

User: “What’s driving that?”

Reasoning LLM thinks:

→ Need breakdown by product category Q4

→ Calls Text2SQL

→ Analyzes and responds: “The main drivers were…”
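The percentages in such an answer are plain arithmetic on the returned figures (Q4 vs Q3 is 0.3 / 1.8, roughly 16.7%). One option, sketched here as an assumption rather than part of the approach described above, is to compute such figures in code and hand them to the reasoning LLM instead of relying on the model's mental arithmetic. The figures are the ones from the dialog; `pct_change` is a hypothetical helper.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Quarterly revenue in millions, taken from the example dialog
revenue = {"Q1": 1.2, "Q2": 1.5, "Q3": 1.8, "Q4": 2.1}

total_growth = pct_change(revenue["Q1"], revenue["Q4"])
q4_vs_q3 = pct_change(revenue["Q3"], revenue["Q4"])
print(f"Q1 to Q4: {total_growth:+.0f}%, Q4 vs Q3: {q4_vs_q3:+.1f}%")
# Q1 to Q4: +75%, Q4 vs Q3: +16.7%
```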

Why This Approach Is Superior

1. Separation of Concerns

Each LLM does what it does best:

- Text2SQL: Precise SQL generation

- Reasoning: Intelligent analysis and conversation

2. Better Performance

- Smaller, faster models possible for Text2SQL

- Less context-switching

- Both layers can be optimized independently and in parallel

3. Higher Quality

- Text2SQL can be fine-tuned or prompted with detailed schema knowledge

- Reasoning LLM focuses on insights, not syntax

- Less “prompt pollution”

4. Easier Maintenance

- Schema changes? Only adjust Text2SQL

- Improve conversational style? Only adjust reasoning prompts

- Clear responsibilities
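One way to keep schema changes confined to the Text2SQL layer, assuming SQLite for the sketch, is to generate its schema context from the live database instead of maintaining it by hand. `describe_schema` is a hypothetical helper; other databases expose the same information through `information_schema`.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> str:
    """Build a compact schema description for the Text2SQL prompt from the database."""
    lines = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk);
        # table names come from sqlite_master, not from user input
        cols = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        lines.append(f"{table}({', '.join(f'{c[1]} {c[2]}' for c in cols)})")
    return "\n".join(lines)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, revenue REAL)")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, category TEXT)")
print(describe_schema(conn))
# products(id INTEGER, category TEXT)
# sales(quarter INTEGER, revenue REAL)
```

Adding a column or table then changes the Text2SQL prompt automatically, while the reasoning layer's prompts stay untouched.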

5. Better Error Handling

- SQL errors can be caught by the Text2SQL layer

- Reasoning LLM can rephrase its request and try again

- Graceful degradation possible
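A minimal sketch of such an error-handling loop: when the database rejects a query, the error message is passed back to the Text2SQL layer for a corrected attempt, and only after repeated failures is the problem surfaced to the reasoning LLM, which can then rephrase its request. `query_with_repair`, `generate_sql`, and `max_attempts` are hypothetical names; the stub simulates a model that fixes a wrong column name once it sees the error.

```python
import sqlite3
from typing import Callable, Optional


def query_with_repair(question: str,
                      generate_sql: Callable[[str, Optional[str]], str],
                      conn: sqlite3.Connection,
                      max_attempts: int = 3) -> list[tuple]:
    """Run Text2SQL output; on a database error, ask for a corrected query."""
    error: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate_sql(question, error)
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            # Feed the failing SQL and the database's message into the next attempt
            error = f"{sql!r} failed with: {exc}"
    # Surface the failure so the reasoning layer can degrade gracefully
    raise RuntimeError(f"No valid SQL after {max_attempts} attempts; last error: {error}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, revenue INTEGER)")
conn.execute("INSERT INTO sales VALUES (4, 2100000)")


def stub_generate(question: str, error: Optional[str]) -> str:
    # First attempt uses a non-existent column; the retry corrects it after seeing the error
    if error is None:
        return "SELECT SUM(amount) FROM sales WHERE quarter = 4"
    return "SELECT SUM(revenue) FROM sales WHERE quarter = 4"


print(query_with_repair("How much revenue did we make in Q4?", stub_generate, conn))
# [(2100000,)]
```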

Implementation in Practice

At HybridAI, we implement exactly this approach for our clients:

- Text2SQL Layer: A specialized model familiarized with your database schema

- Reasoning Layer: Claude or GPT-4 for natural conversations about your data

- Security: Row-level security and access control at DB level

- Caching: Frequent queries are cached for faster responses
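A caching layer of this kind could be sketched as a small time-limited cache, under a few assumptions: results are keyed by the exact SQL text, entries expire after a fixed TTL, and, because of the row-level security above, each key also includes a `scope` identifying the user or role so cached rows never cross access boundaries. `QueryCache` and its parameters are hypothetical.

```python
import sqlite3
import time
from typing import Callable


class QueryCache:
    """Hypothetical time-limited cache for query results, keyed by scope and SQL."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[tuple[str, str], tuple[float, list[tuple]]] = {}

    def fetch(self, conn: sqlite3.Connection, sql: str, scope: str) -> list[tuple]:
        """Return cached rows while fresh, otherwise run the query and cache it."""
        key = (scope, sql.strip())
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]
        rows = conn.execute(sql).fetchall()
        self._entries[key] = (now, rows)
        return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, revenue INTEGER)")
conn.execute("INSERT INTO sales VALUES (4, 2100000)")

cache = QueryCache(ttl_seconds=60)
sql = "SELECT SUM(revenue) FROM sales WHERE quarter = 4"
first = cache.fetch(conn, sql, scope="analyst")
conn.execute("INSERT INTO sales VALUES (4, 100000)")
second = cache.fetch(conn, sql, scope="analyst")    # served from cache: [(2100000,)]
fresh = cache.fetch(conn, sql, scope="controller")  # different scope: [(2200000,)]
```

The trade-off is freshness: within the TTL, a cached answer can lag behind newly loaded data, so the TTL should match how often the underlying tables change.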

The result: Your employees can speak with your BI data in natural language – precisely, quickly, and intelligently.

Conclusion

Connecting AI with structured BI data is not a trivial task. Neither RAG nor simple SQL tools are sufficient.

The solution lies in intelligent division of labor: A specialized Text2SQL LLM translates queries into precise SQL, while a higher-level Reasoning LLM conducts the conversation and generates insights.

This two-layer approach combines the best of both worlds: The precision of structured queries with the flexibility of natural conversation.

Want to enhance your BI data with AI? At HybridAI, we support you in implementing intelligent data analysis solutions. Contact us for a non-binding conversation.