Everyone’s talking about AI in accounting now. The consultants have their slides ready. The vendors are rebranding their invoice OCR tools. LinkedIn is full of posts about “How I automated my finance tasks – comment FINANCE to get my n8n workflow.” Wrappers everywhere.

Here’s my honest take after working on the technical AI side of this stuff for a while: AI will absolutely change accounting. But not in the way most people think, and probably not as fast as the headlines suggest.

The terminology mess

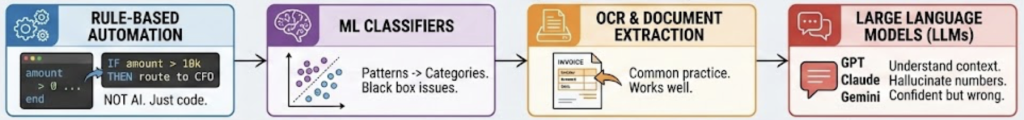

Let’s start with the basics, because the language around this is a disaster. When people say “AI in accounting,” they could mean any of the following:

Rule-based automation: If invoice amount > 10,000, route to CFO. If “Intra-community supply under § 4 Nr. 1b UStG”, then “VAT_00”. Not AI. Just code with a marketing budget.

Machine learning classifiers: Trained models that categorize transactions based on patterns. These are actually useful and have been around for years. But they often generalize poorly and are hard to keep up to date, because they tend to be black boxes.

OCR and document extraction: Reading invoices and pulling out vendor names, amounts, dates. This is common practice now. And in some instances it actually works.

Large Language Models: Our favorite brand new toy. GPT, Claude, Gemini. Can understand context, interpret messy inputs, handle edge cases. But also: can hallucinate numbers with absolute confidence.
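To make the first category concrete, this is roughly what those two rules look like as a minimal Python sketch. The function names and the `AP_CLERK` fallback queue are invented for illustration; only the 10,000 threshold and the `VAT_00` code come from the example above.

```python
from decimal import Decimal
from typing import Optional

CFO_THRESHOLD = Decimal("10000")  # threshold taken from the example rule


def route_invoice(amount: Decimal) -> str:
    """Route by amount: anything above the threshold goes to the CFO."""
    # "AP_CLERK" is a hypothetical default queue, not from the article.
    return "CFO" if amount > CFO_THRESHOLD else "AP_CLERK"


def tax_code_for(exemption_note: str) -> Optional[str]:
    """Map a known exemption note to a tax code; None means no rule matched."""
    if "intra-community supply" in exemption_note.lower():
        return "VAT_00"
    return None
```

Two `if` statements. Useful, auditable, and about as intelligent as a light switch.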

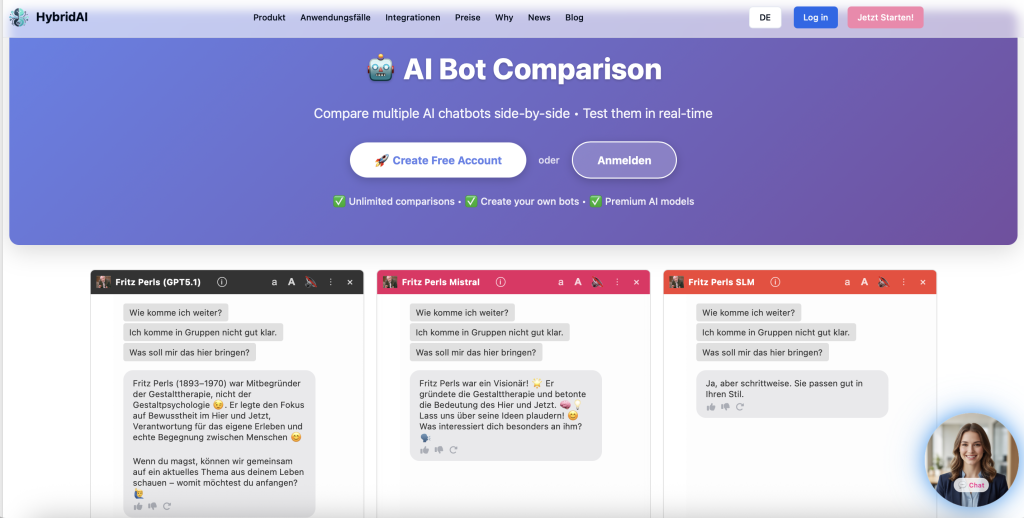

Most “AI accounting” products today are really just ML classifiers with an LLM-powered chatbot stapled on top. Which is fine, but let’s be honest about what we’re dealing with.

Where AI already works today

Behind all the marketing talk, there are real wins happening right now:

Document capture and extraction. Modern systems can read invoices in almost any format, language, and level of scan quality. The combination of vision models and LLMs has basically solved this problem. You still need human review for edge cases, but 80-90% straight-through processing is achievable. And if you get an invoice in a new format, it still works. Because AI.

Transaction categorization. For standard cases, ML models are excellent at learning your chart of accounts and applying it consistently. They don’t get tired on Friday afternoons. They don’t have “creative” interpretations of cost centers. Also: don’t forget that AI doesn’t necessarily mean LLMs. We also have an awesome family of encoder-only models with fewer than 1B parameters that are wonderful at classification.

Anomaly detection. Spotting duplicate invoices, unusual amounts, vendors that suddenly changed bank details. Pattern recognition at scale is exactly what ML does well. This is genuinely useful for fraud prevention and audit prep.

Natural language queries. “Show me all marketing expenses over 50k last quarter” without writing SQL. This works now. It’s not magic, but it looks like magic and really saves time. Why not chat with your business data? 😉

The common thread? These are all tasks where being approximately right most of the time is valuable, and where humans can easily verify the output.
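Two of the anomaly checks mentioned above, duplicate invoices and changed bank details, can be sketched in a few lines of Python. Note that this is plain exact-match logic rather than ML; a learned model would sit on top of it to score the fuzzier cases. The `Invoice` fields and the `known_ibans` lookup are assumptions made for the sketch.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Invoice:
    # Hypothetical minimal record; real systems carry many more fields.
    vendor_id: str
    invoice_number: str
    amount: Decimal
    iban: str


def normalize_number(invoice_number: str) -> str:
    """Uppercase and drop separators so 'RE-1001' and 're 1001' collide."""
    return "".join(ch for ch in invoice_number.upper() if ch.isalnum())


def find_duplicates(invoices):
    """Return groups of invoices sharing vendor, normalized number and amount."""
    groups = defaultdict(list)
    for inv in invoices:
        key = (inv.vendor_id, normalize_number(inv.invoice_number), inv.amount)
        groups[key].append(inv)
    return [group for group in groups.values() if len(group) > 1]


def find_bank_detail_changes(invoices, known_ibans):
    """Return invoices whose IBAN differs from the one on file for the vendor."""
    return [
        inv
        for inv in invoices
        if inv.vendor_id in known_ibans and inv.iban != known_ibans[inv.vendor_id]
    ]
```

Both functions return the offending invoices themselves, so a reviewer can confirm or dismiss each hit in seconds. That is the “easy to verify” property in practice.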

Where things get interesting (and dangerous)

Now for the hard part.

The moment you need AI to make a decision that has legal or tax implications, everything changes. Consider VAT determination on an incoming invoice. Sounds simple: it’s 19%, right?

Except when it’s not. Is the supplier in another EU country? Is this a service or a good? Does reverse charge apply? Is it construction-related (§13b in Germany)? Is the supplier even VAT-registered? Is it a triangular transaction? Is there a pandemic with special VAT rates?

I’ve written about this specific problem with tax codes in SAP before. The short version: there are dozens of edge cases, and getting it wrong means audit findings, back taxes, and possibly fraud allegations.

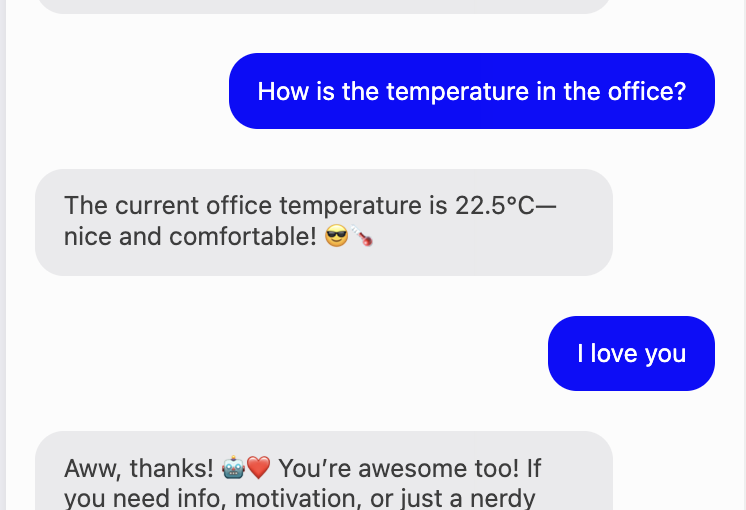

Here’s the uncomfortable truth: LLMs are very good at explaining what reverse charge is. In academic voice or as a sonnet. But they’re dangerously unreliable at determining whether a specific invoice should use it. The difference matters.

The hallucination problem is real. An LLM will confidently tell you that this invoice clearly qualifies for intra-community supply treatment. It might even cite the relevant EU directive. It might also be completely wrong, because it didn’t notice the supplier has a German VAT ID, or because the goods never actually left the country. I ran a couple of examples through different LLMs – and they were very opinionated about certain things. But not necessarily right. So right now, we’re creating a VATBench to get a better view of this.

When Claude or GPT makes a mistake in a creative writing task, you get a weird sentence. When it makes a mistake in tax determination, you get a six-figure assessment in your next audit.

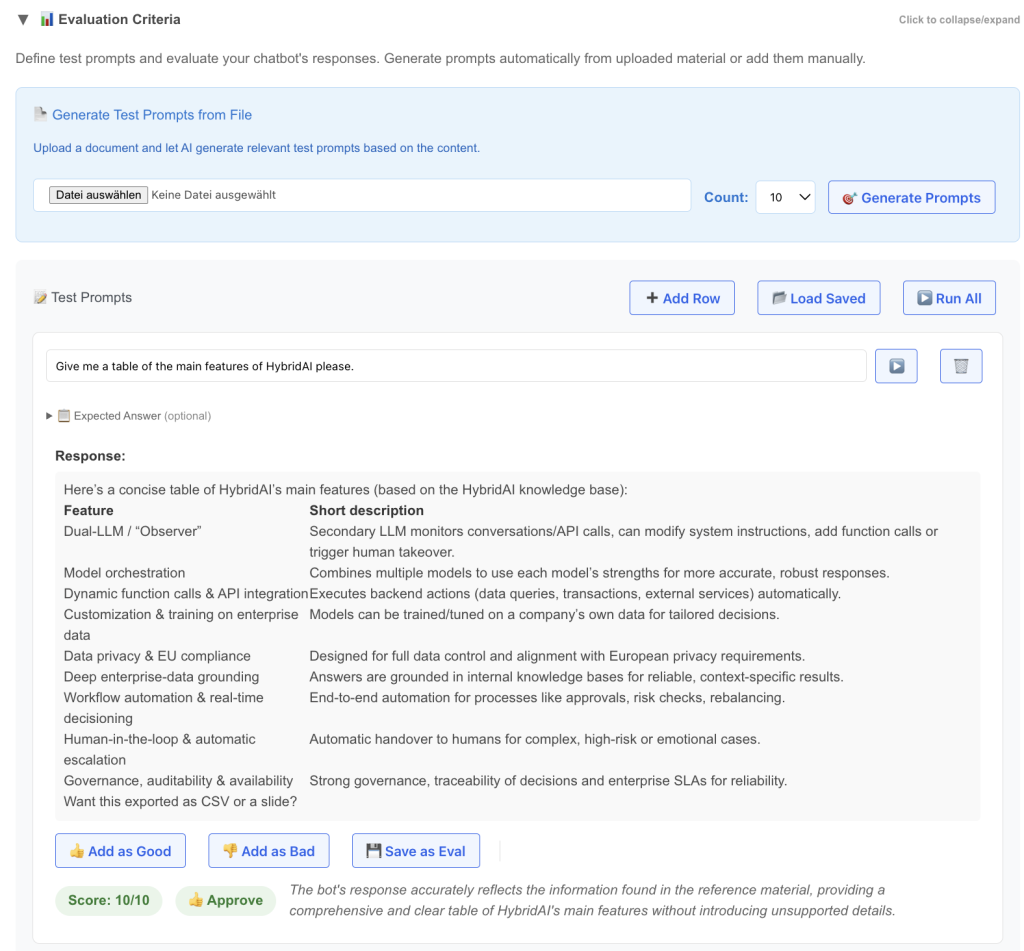

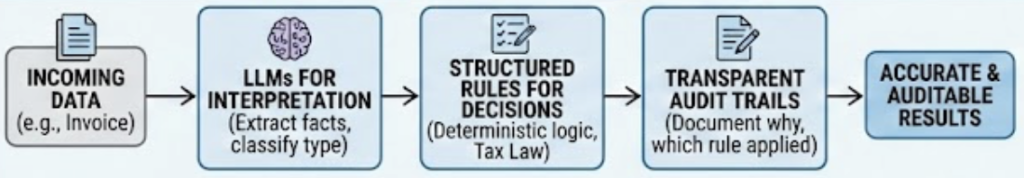

The hybrid AI architecture that actually works

So where does this leave us? Not with “AI bad, humans good.” The answer is architectural. The pattern that works combines three things:

LLMs for interpretation. Let the language model read the invoice, extract the relevant facts, classify the transaction type, identify the supplier’s jurisdiction. This is what they’re good at – information extraction!

Structured rules for decisions. Tax law is not creative. It’s a decision tree with many branches but clear logic. Once you have the facts, applying the rules should be deterministic. No creativity needed. No hallucination possible in this step.

Transparent audit trails. Every decision needs to document why it was made. Which invoice fields were extracted. How the supplier was classified. Which rule determined the tax code. When the auditor asks, you need answers.

The key insight: don’t ask the LLM what the tax code should be. Ask it to extract the facts, then apply your rules. It’s not half as sexy as “our AI automatically handles everything.” But it works.
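As a rough illustration of that split, here is a minimal Python sketch. The LLM’s only job would be to fill in `InvoiceFacts`; everything after that is ordinary code. All names, fields, and tax codes here are hypothetical, and the rule set is deliberately tiny. Real VAT determination has far more branches, so treat this as the shape of the solution, not as tax logic you can ship.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# ISO country codes of the EU member states.
EU_COUNTRIES = {
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR",
    "HU", "IE", "IT", "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK",
    "SI", "ES", "SE",
}


@dataclass(frozen=True)
class InvoiceFacts:
    """Facts the extraction step (e.g. an LLM) hands over. Hypothetical schema."""
    supplier_country: str
    supplier_vat_id: Optional[str]
    is_service: bool
    is_construction_service: bool


@dataclass
class Decision:
    tax_code: str
    trail: List[str] = field(default_factory=list)  # audit trail entries


def determine_tax_code(facts: InvoiceFacts, buyer_country: str = "DE") -> Decision:
    """Apply simplified, deterministic rules and record which one fired."""
    trail = [f"facts={facts}"]

    def decide(code: str, rule: str) -> Decision:
        trail.append(f"rule={rule} -> {code}")
        return Decision(code, trail)

    if not facts.supplier_vat_id:
        return decide("MANUAL_REVIEW", "missing_supplier_vat_id")
    if facts.supplier_country == buyer_country:
        if facts.is_construction_service:
            return decide("RC_13B", "domestic_construction_reverse_charge")
        return decide("VAT_19", "domestic_standard_rate")
    if facts.supplier_country in EU_COUNTRIES:
        if facts.is_service:
            return decide("RC_EU_SERVICE", "eu_service_reverse_charge")
        return decide("IC_ACQUISITION", "eu_goods_acquisition")
    # Anything the rules do not cover goes to a human, never to a guess.
    return decide("MANUAL_REVIEW", "no_rule_matched")


decision = determine_tax_code(InvoiceFacts("FR", "FR12345678901", False, False))
print(decision.tax_code)  # IC_ACQUISITION
print(decision.trail)
```

The same facts always produce the same code, and the trail records both the extracted facts and the rule that fired. If the LLM extracts a wrong fact, you can see that in the trail and fix the extraction, instead of arguing with a model about why it “felt” like reverse charge.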

What this means for CFO offices and finance teams

A few practical conclusions:

You’re not getting replaced. The “AI will automate away accounting” takes are mostly written by people who’ve never closed a month-end.

Your job is changing. Less data entry, more oversight. Less manual matching, more exception handling. Less typing, more thinking. If you’re spending 60% of your time on tasks that could be automated, you should definitely be talking about AI.

You need to understand the tools. Not how to build an LLM from scratch (even though that is super fun to do). But how they work, where they fail, what they can and can’t do. The finance leaders who thrive will be the ones who can evaluate AI vendors with real technical understanding.

Start with contained problems. Don’t try to “AI-enable the entire finance function.” Pick one painful process with clear success criteria. Invoice capture. Expense categorization. Intercompany matching. Get that working, learn from it, then expand.

The bottom line on AI in accounting

AI in accounting is real, useful, and overhyped all at the same time. The technology works for information extraction, pattern matching, and natural language interfaces. It doesn’t work, at least not safely, for unsupervised decision-making on anything with legal consequences.

The winning approach combines the interpretive power of LLMs with the precision of rule-based systems and the oversight of human experts. It’s less exciting than “fully autonomous AI accounting” but it’s what actually ships, actually works, and actually survives audits.